Maria Teleki

Howdy! I’m a fourth-year PhD student in Computer Science at Texas A&M University (gig 'em!), advised by Prof. James Caverlee.

My research rethinks spoken language understanding by modeling disfluency, spontaneity, and variability as fundamental features of human communication. I aim to develop next-generation conversational AI that thrives under real-world conditions -- systems that generalize across speakers, domains, and contexts to power scalable, speech-centric applications in information access, recommendation, and decision support.

Robust Speech Understanding in Natural Communication

Human speech is spontaneous and messy -- people pause, restart, hedge, and change course mid-sentence. These spontaneous phenomena cause significant drops in the performance of speech and language systems. I build models and evaluation frameworks that treat these phenomena as core properties of communication, enabling speech technologies to perform reliably under these real-world conditions.

Comparing ASR Systems in the Context of Speech Disfluencies
Maria Teleki, Xiangjue Dong, Soohwan Kim, and James Caverlee
INTERSPEECH 2024

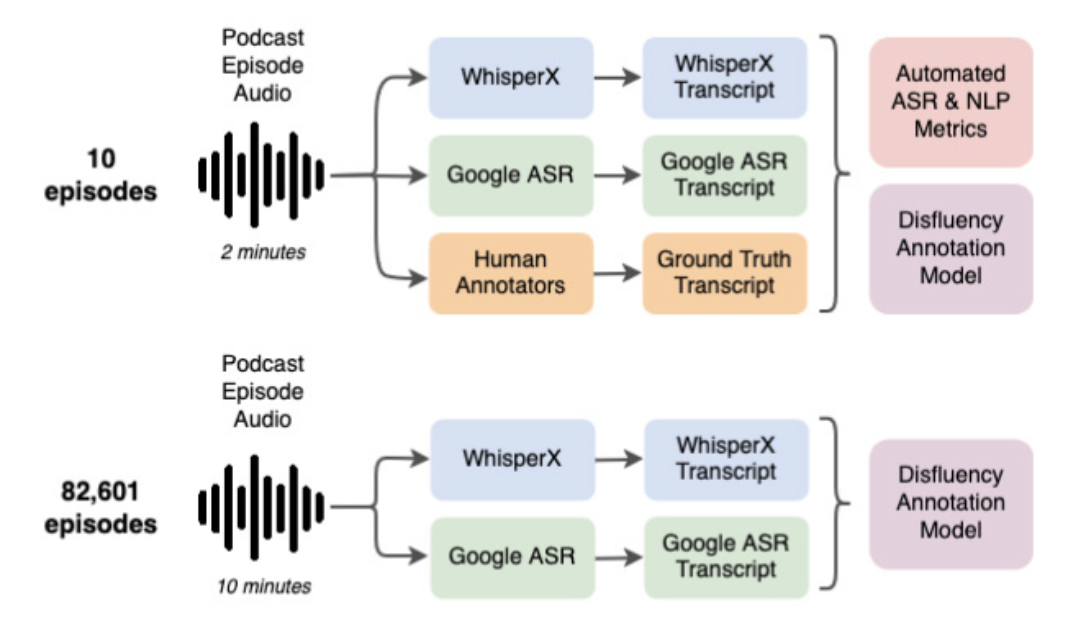

In this work, we evaluate the disfluency capabilities of two automatic speech recognition systems -- Google ASR and WhisperX -- through a study of 10 human-annotated podcast episodes and a larger set of 82,601 podcast episodes. We employ a state-of-the-art disfluency annotation model to perform a fine-grained analysis of the disfluencies in both the scripted and non-scripted podcasts. On the set of 10 podcasts, we find that while WhisperX tends to perform better overall, Google ASR outperforms it in WIL (word information lost) and BLEU scores for non-scripted podcasts. We also find that Google ASR's transcripts tend to contain closer to the ground-truth number of edited-type disfluent nodes, while WhisperX's transcripts are closer for interjection-type disfluent nodes. The same pattern holds in the larger set. Our findings have implications for the choice of an ASR model when building a larger system: that choice should depend on the distribution of disfluent nodes present in the data.
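WIL is computed from a word-level alignment between a reference transcript and an ASR hypothesis. A minimal plain-Python sketch of the standard definition, WIL = 1 - H^2 / (N_ref * N_hyp), where H is the number of matched words in a minimum-edit-distance alignment. The function names are hypothetical, alignment ties are broken toward diagonal moves, and this is not the evaluation code used in the paper:

```python
def align_counts(ref, hyp):
    """Return (hits, substitutions, deletions, insertions) for a
    minimum-edit-distance alignment of two token lists."""
    n, m = len(ref), len(hyp)
    # dp[i][j] = edit distance between ref[:i] and hyp[:j]
    dp = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        dp[i][0] = i
    for j in range(1, m + 1):
        dp[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(dp[i - 1][j - 1] + cost,  # match / substitution
                           dp[i - 1][j] + 1,         # deletion
                           dp[i][j - 1] + 1)         # insertion
    # Backtrace from the bottom-right corner, preferring diagonal moves.
    hits = subs = dels = ins = 0
    i, j = n, m
    while i > 0 or j > 0:
        diag_cost = 0 if i > 0 and j > 0 and ref[i - 1] == hyp[j - 1] else 1
        if i > 0 and j > 0 and dp[i][j] == dp[i - 1][j - 1] + diag_cost:
            if diag_cost == 0:
                hits += 1
            else:
                subs += 1
            i, j = i - 1, j - 1
        elif i > 0 and dp[i][j] == dp[i - 1][j] + 1:
            dels += 1
            i -= 1
        else:
            ins += 1
            j -= 1
    return hits, subs, dels, ins


def word_information_lost(reference, hypothesis):
    """WIL = 1 - (H / N_ref) * (H / N_hyp) over whitespace-split tokens."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref or not hyp:
        raise ValueError("reference and hypothesis must be non-empty")
    hits, _, _, _ = align_counts(ref, hyp)
    return 1.0 - (hits / len(ref)) * (hits / len(hyp))
```

For example, `word_information_lost("i want a movie", "i want uh a movie")` is approximately 0.2: every reference word is matched, but the inserted filler "uh" lengthens the hypothesis and lowers the preserved-information term.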

Z-Scores: A Metric for Linguistically Assessing Disfluency Removal
Maria Teleki, Sai Janjur, Haoran Liu, Oliver Grabner, Ketan Verma, Thomas Docog, Xiangjue Dong, Lingfeng Shi, Cong Wang, Stephanie Birkelbach, Jason Kim, Yin Zhang, James Caverlee
arXiv 2025

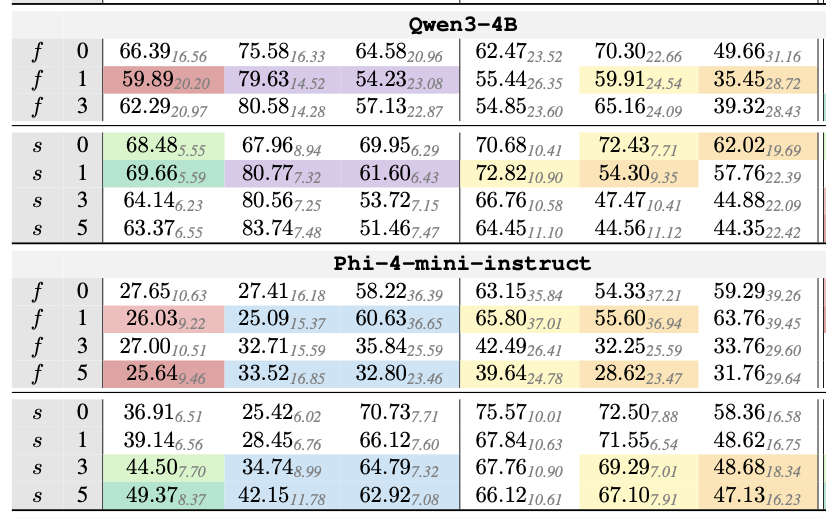

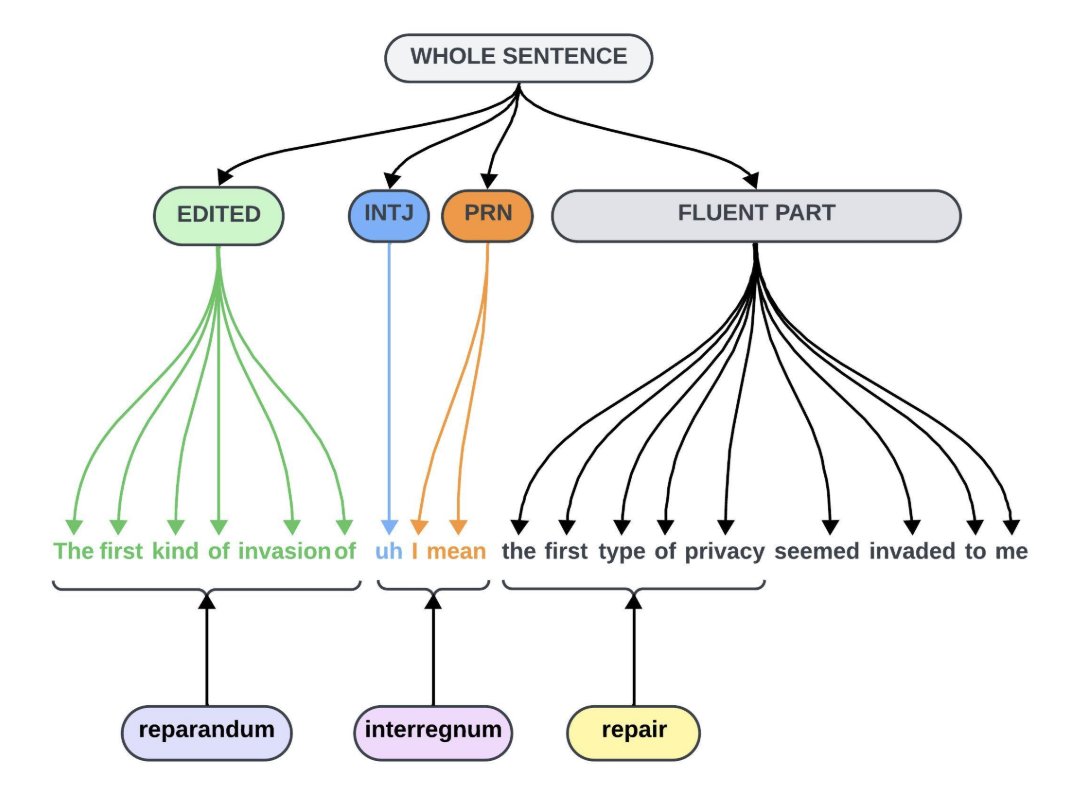

Evaluating disfluency removal in speech requires more than aggregate token-level scores. Traditional word-based metrics such as precision, recall, and F1 (E-Scores) capture overall performance but cannot reveal why models succeed or fail. We introduce Z-Scores, a span-level, linguistically grounded evaluation metric that categorizes system behavior across distinct disfluency types (EDITED, INTJ, PRN). Our deterministic alignment module enables robust mapping between generated text and disfluent transcripts, allowing Z-Scores to expose systematic weaknesses that word-level metrics obscure. By providing category-specific diagnostics, Z-Scores enable researchers to identify model failure modes and design targeted interventions -- such as tailored prompts or data augmentation -- yielding measurable performance improvements. A case study with LLMs shows that Z-Scores uncover challenges with INTJ and PRN disfluencies hidden in aggregate F1, directly informing model refinement strategies.
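The gap between aggregate and category-level scores can be illustrated with a toy example. This sketch uses Python's `difflib.SequenceMatcher` as a stand-in aligner (an assumption; the paper describes its own deterministic alignment module), computes word-level precision, recall, and F1 over deleted tokens in the spirit of E-Scores, and adds a per-category removal rate. That rate is a simplified token-level proxy, not the span-level Z-Score definition, and the sentence, labels, and function names are hypothetical:

```python
from difflib import SequenceMatcher


def deleted_indices(disfluent_tokens, output_tokens):
    """Indices of disfluent tokens that do not survive into the model output,
    using difflib's matching blocks as a stand-in alignment."""
    matcher = SequenceMatcher(a=disfluent_tokens, b=output_tokens, autojunk=False)
    kept = set()
    for block in matcher.get_matching_blocks():
        kept.update(range(block.a, block.a + block.size))
    return set(range(len(disfluent_tokens))) - kept


def word_scores(gold_deleted, pred_deleted):
    """Word-level precision, recall, and F1 over deleted token indices."""
    tp = len(gold_deleted & pred_deleted)
    precision = tp / len(pred_deleted) if pred_deleted else 0.0
    recall = tp / len(gold_deleted) if gold_deleted else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def per_category_removal(gold_labels, pred_deleted):
    """Fraction of gold disfluent tokens removed, per category label."""
    rates = {}
    for category in sorted({c for c in gold_labels if c}):
        idx = {i for i, c in enumerate(gold_labels) if c == category}
        rates[category] = len(idx & pred_deleted) / len(idx)
    return rates


# Toy transcript with hypothetical labels (None = fluent token).
tokens = "uh i want i need a flight you know to boston".split()
labels = ["INTJ", "EDITED", "EDITED", None, None, None, None, "PRN", "PRN", None, None]
output = "i need a flight you know to boston".split()

pred = deleted_indices(tokens, output)
gold = {i for i, c in enumerate(labels) if c}
print(word_scores(gold, pred))             # precision 1.0, recall 0.6, F1 about 0.75
print(per_category_removal(labels, pred))  # {'EDITED': 1.0, 'INTJ': 1.0, 'PRN': 0.0}
```

In this toy case, perfect precision and an F1 of about 0.75 say nothing about which disfluencies were missed; the per-category view shows that the parenthetical "you know" was never removed.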

DRES: Benchmarking LLMs for Disfluency Removal
Maria Teleki, Sai Janjur, Haoran Liu, Oliver Grabner, Ketan Verma, Thomas Docog, Xiangjue Dong, Lingfeng Shi, Cong Wang, Stephanie Birkelbach, Jason Kim, Yin Zhang, James Caverlee
arXiv 2025

Disfluencies -- such as 'um,' 'uh,' interjections, parentheticals, and edited statements -- remain a persistent challenge for speech-driven systems, degrading accuracy in command interpretation, summarization, and conversational agents. We introduce DRES (Disfluency Removal Evaluation Suite), a controlled text-level benchmark that establishes a reproducible semantic upper bound for this task. DRES builds on human-annotated Switchboard transcripts, isolating disfluency removal from ASR errors and acoustic variability. We systematically evaluate proprietary and open-source LLMs across scales, prompting strategies, and architectures. Our results reveal that (i) simple segmentation consistently improves performance, even for long-context models; (ii) reasoning-oriented models tend to over-delete fluent tokens; and (iii) fine-tuning achieves near state-of-the-art precision and recall but harms generalization abilities. We further present a set of LLM-specific error modes and offer nine practical recommendations (R1-R9) for deploying disfluency removal in speech-driven pipelines. DRES provides a reproducible, model-agnostic foundation for advancing robust spoken-language systems.

Quantifying the Impact of Disfluency on Spoken Content Summarization
Maria Teleki, Xiangjue Dong, and James Caverlee
LREC-COLING 2024

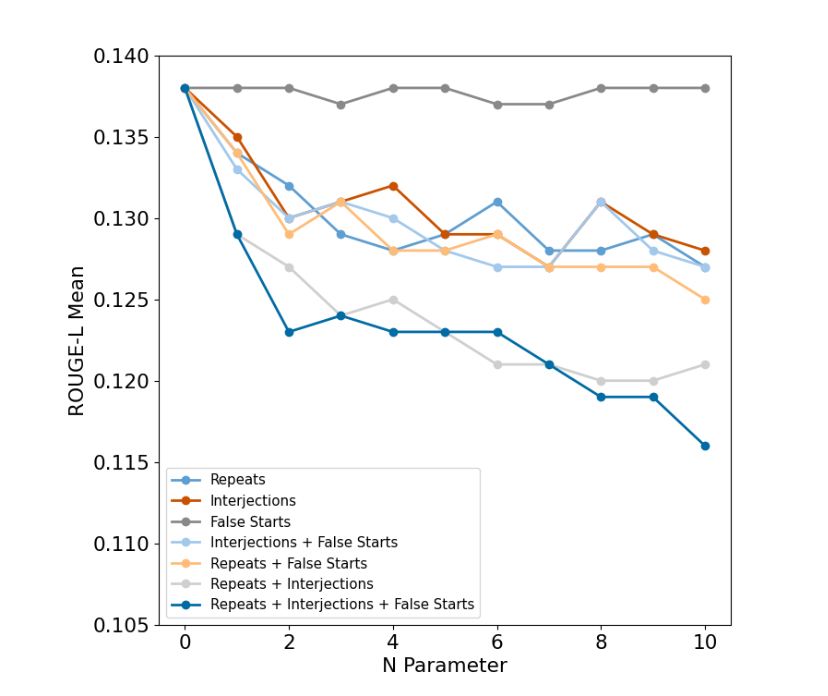

Spoken content is abundant -- including podcasts, meeting transcripts, and TikTok-like short videos. And yet, many important tasks like summarization are often designed for written content rather than the looser, noisier, and more disfluent style of spoken content. Hence, we aim in this paper to quantify the impact of disfluency on spoken content summarization. Do disfluencies negatively impact the quality of summaries generated by existing approaches? And if so, to what degree? Coupled with these goals, we also investigate two methods towards improving summarization in the presence of such disfluencies. We find that summarization quality does degrade with an increase in these disfluencies and that a combination of multiple disfluency types leads to even greater degradation. Further, our experimental results show that naively removing disfluencies and augmenting with special tags can worsen summarization when applied at test time, but that removing disfluencies for fine-tuning yields the best results. We make the code available at https://github.com/mariateleki/Quantifying-Impact-Disfluency.

DACL: Disfluency Augmented Curriculum Learning for Fluent Text Generation
Rohan Chaudhury, Maria Teleki, Xiangjue Dong, and James Caverlee
LREC-COLING 2024

Voice-driven software systems are in abundance. However, language models that power these systems are traditionally trained on fluent, written text corpora. Hence there can be a misalignment between the inherent disfluency of transcribed spoken content and the fluency of the written training data. Furthermore, gold-standard disfluency annotations of various complexities for incremental training can be expensive to collect. So, we propose in this paper a Disfluency Augmented Curriculum Learning (DACL) approach to tackle the complex structure of disfluent sentences and generate fluent texts from them, by using Curriculum Learning (CL) coupled with our synthetically augmented disfluent texts of various levels. DACL harnesses the tiered structure of our generated synthetic disfluent data using CL, by training the model on basic samples (i.e. more fluent) first before training it on more complex samples (i.e. more disfluent). In contrast to the random data exposure paradigm, DACL focuses on a simple-to-complex learning process. We comprehensively evaluate DACL on Switchboard Penn Treebank-3 and compare it to the state-of-the-art disfluency removal models. Our model surpasses existing techniques in word-based precision (by up to 1%) and has shown favorable recall and F1 scores.
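The simple-to-complex ordering can be sketched in a few lines. This is a minimal illustration, assuming a toy generator that injects exactly `level` disfluencies per sentence as either a filler insertion or a word repetition; the `FILLERS` list, the 50/50 mix, and the function names are hypothetical, and DACL's actual augmentation scheme is described in the paper:

```python
import random

FILLERS = ["uh", "um"]  # hypothetical filler inventory


def add_disfluencies(tokens, level, rng):
    """Return a copy of `tokens` with `level` synthetic disfluencies.

    Each disfluency is either an inserted filler or a repeated word,
    so the output is always exactly `level` tokens longer than the input.
    """
    if not tokens:
        raise ValueError("tokens must be non-empty")
    out = list(tokens)
    for _ in range(level):
        if rng.random() < 0.5:
            out.insert(rng.randrange(len(out) + 1), rng.choice(FILLERS))
        else:
            i = rng.randrange(len(out))
            out.insert(i, out[i])  # repetition, e.g. "i i want"
    return out


def build_curriculum(sentences, max_level, seed=0):
    """Group (disfluent, fluent) training pairs into stages ordered from
    level 0 (already fluent) up to `max_level` (most disfluent)."""
    rng = random.Random(seed)
    stages = []
    for level in range(max_level + 1):
        stage = []
        for sentence in sentences:
            disfluent = add_disfluencies(sentence.split(), level, rng)
            stage.append((" ".join(disfluent), sentence))
        stages.append(stage)
    return stages
```

A training loop would then visit `stages` in order, rather than shuffling all levels together, which is the contrast with random data exposure that the abstract draws.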

Modeling Variability in Spoken Language Technologies

Speech AI must work across contexts, domains, and communication styles -- from different speakers and linguistic backgrounds to podcasts, casual dialogue, and specialized settings. I study how models behave under this variability and design methods that improve generalization across settings.

Masculine Defaults via Gendered Discourse in Podcasts and Large Language Models
Maria Teleki, Xiangjue Dong, Haoran Liu, and James Caverlee
ICWSM 2025
Presented at: IC2S2 2025, SICon@ACL 2025

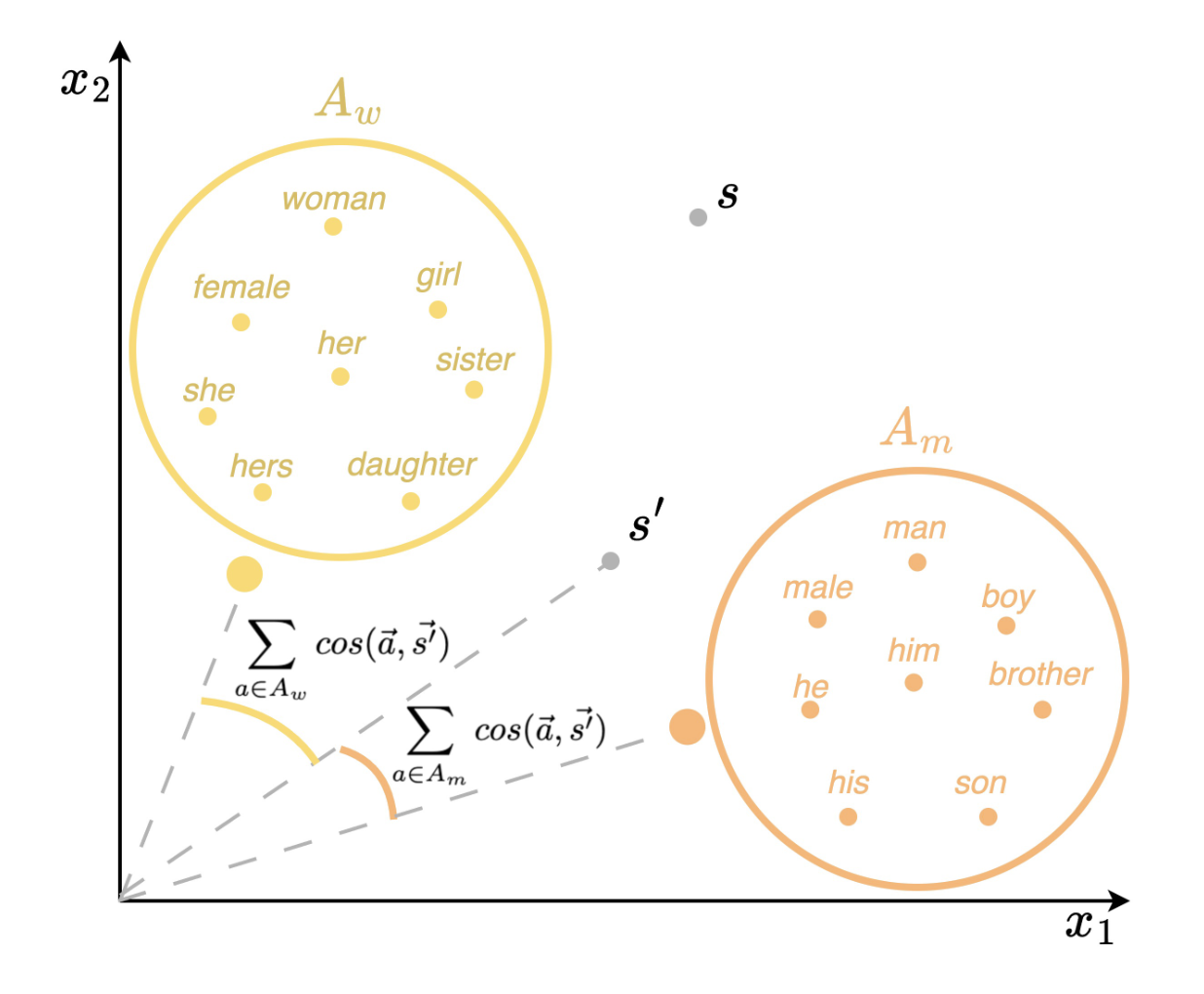

Masculine defaults are widely recognized as a significant type of gender bias, but they often go unnoticed because they are under-researched. Masculine defaults involve three key parts: (i) the cultural context, (ii) the masculine characteristics or behaviors, and (iii) the reward for, or simply acceptance of, those masculine characteristics or behaviors. In this work, we study discourse-based masculine defaults, and propose a twofold framework for (i) the large-scale discovery and analysis of gendered discourse words in spoken content via our Gendered Discourse Correlation Framework (GDCF); and (ii) the measurement of the gender bias associated with these gendered discourse words in LLMs via our Discourse Word-Embedding Association Test (D-WEAT). We focus our study on podcasts, a popular and growing form of social media, analyzing 15,117 podcast episodes. We analyze correlations between gender and discourse words -- discovered via LDA and BERTopic -- to automatically form gendered discourse word lists. We then study the prevalence of these gendered discourse words in domain-specific contexts, and find that gendered discourse-based masculine defaults exist in the domains of business, technology/politics, and video games. Next, we study the representation of these gendered discourse words produced by a state-of-the-art LLM embedding model from OpenAI, and find that the masculine discourse words have a more stable and robust representation than the feminine discourse words, which may result in better system performance on downstream tasks for men. Hence, men are rewarded for their discourse patterns with better system performance by one of the state-of-the-art language models -- and this embedding disparity is a representational harm and a masculine default.
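D-WEAT builds on the Word-Embedding Association Test. For orientation only, the sketch below computes the standard WEAT effect size in plain Python: the difference in mean association s(w, A, B) between target sets X and Y, divided by the standard deviation of all associations (this sketch uses the sample standard deviation via `statistics.stdev`). Which discourse words go into X, Y, A, and B for D-WEAT, and any changes D-WEAT makes to this statistic, are defined in the paper and are not assumed here; the toy 2-D vectors stand in for real embeddings:

```python
import math
import statistics


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def association(w, A, B):
    """s(w, A, B): mean similarity to attribute set A minus mean similarity to B."""
    return statistics.mean(cosine(w, a) for a in A) - statistics.mean(cosine(w, b) for b in B)


def weat_effect_size(X, Y, A, B):
    """Standard WEAT effect size for target sets X, Y and attribute sets A, B."""
    sx = [association(x, A, B) for x in X]
    sy = [association(y, A, B) for y in Y]
    return (statistics.mean(sx) - statistics.mean(sy)) / statistics.stdev(sx + sy)


# Toy vectors: X leans toward attribute A, Y leans toward attribute B.
A = [[1.0, 0.0]]
B = [[0.0, 1.0]]
X = [[1.0, 0.1], [0.9, 0.0]]
Y = [[0.0, 1.0], [0.1, 0.9]]
print(weat_effect_size(X, Y, A, B))  # positive: X is more associated with A than Y is
```

Swapping X and Y flips the sign of the effect size, so the direction of the reported bias depends on which target set is listed first.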

CHOIR: Collaborative Harmonization fOr Inference Robustness
Xiangjue Dong, Cong Wang, Maria Teleki, Millenium Bismay, and James Caverlee
arXiv 2025

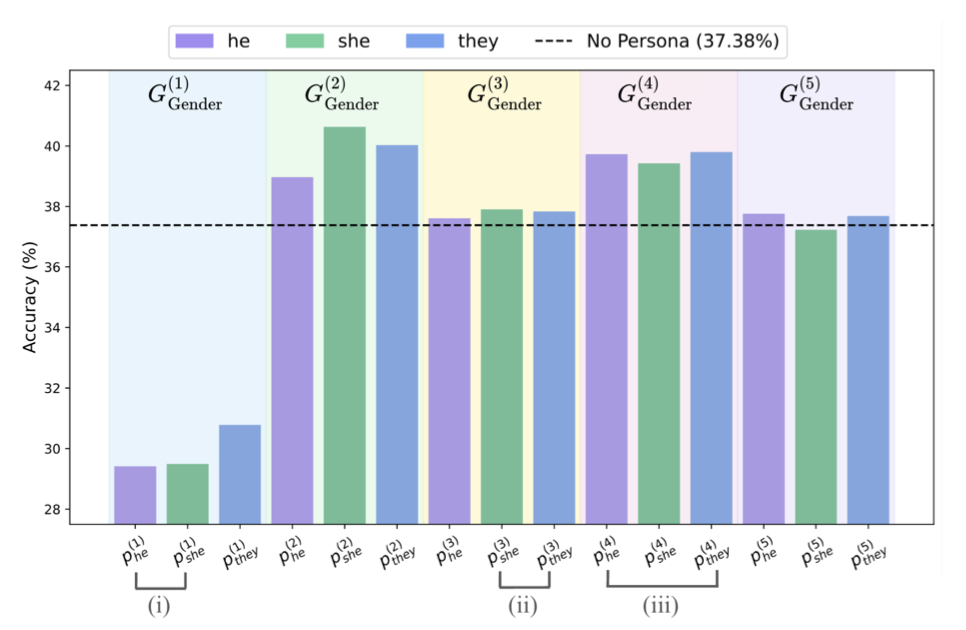

Persona-assigned Large Language Models (LLMs) can adopt diverse roles, enabling personalized and context-aware reasoning. However, even minor demographic perturbations in personas, such as simple pronoun changes, can alter reasoning trajectories, leading to divergent sets of correct answers. Instead of treating these variations as biases to be mitigated, we explore their potential as a constructive resource to improve reasoning robustness. We propose CHOIR (Collaborative Harmonization fOr Inference Robustness), a test-time framework that harmonizes multiple persona-conditioned reasoning signals into a unified prediction. CHOIR orchestrates a collaborative decoding process among counterfactual personas, dynamically balancing agreement and divergence in their reasoning paths. Experiments on various reasoning benchmarks demonstrate that CHOIR consistently enhances performance across demographics, model architectures, scales, and tasks -- without additional training. Improvements reach up to 26.4% for individual demographic groups and 19.2% on average across five demographics. It remains effective even when base personas are suboptimal. By reframing persona variation as a constructive signal, CHOIR provides a scalable and generalizable approach to more reliable LLM reasoning.

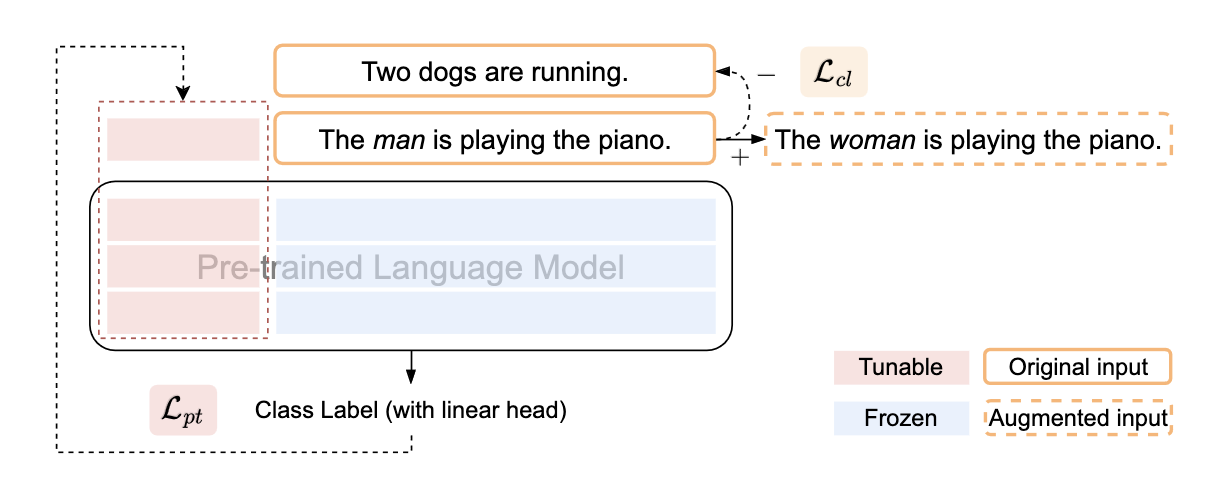

Co2PT: Mitigating Bias in Pre-trained Language Models through Counterfactual Contrastive Prompt Tuning
Xiangjue Dong, Ziwei Zhu, Zhuoer Wang, Maria Teleki, and James Caverlee
EMNLP Findings 2023

Pre-trained Language Models are widely used in many important real-world applications. However, recent studies show that these models can encode social biases from large pre-training corpora and even amplify biases in downstream applications. To address this challenge, we propose Co2PT, an efficient and effective debias-while-prompt tuning method for mitigating biases via counterfactual contrastive prompt tuning on downstream tasks. Our experiments conducted on three extrinsic bias benchmarks demonstrate the effectiveness of Co2PT on bias mitigation during the prompt tuning process and its adaptability to existing upstream debiased language models. These findings indicate the strength of Co2PT and provide promising avenues for further enhancement in bias mitigation on downstream tasks.

Conversational AI in Noisy, Real-World Settings

Most conversational AI is built for idealized, text-based input -- far from how people actually interact. I design models and evaluation approaches that handle spoken, noisy, and context-rich dialogue, advancing conversational systems that bridge speech and language in practical applications.

I want a horror -- comedy -- movie: Slips-of-the-Tongue Impact Conversational Recommender System Performance
Maria Teleki, Lingfeng Shi, Chengkai Liu, and James Caverlee
INTERSPEECH 2025

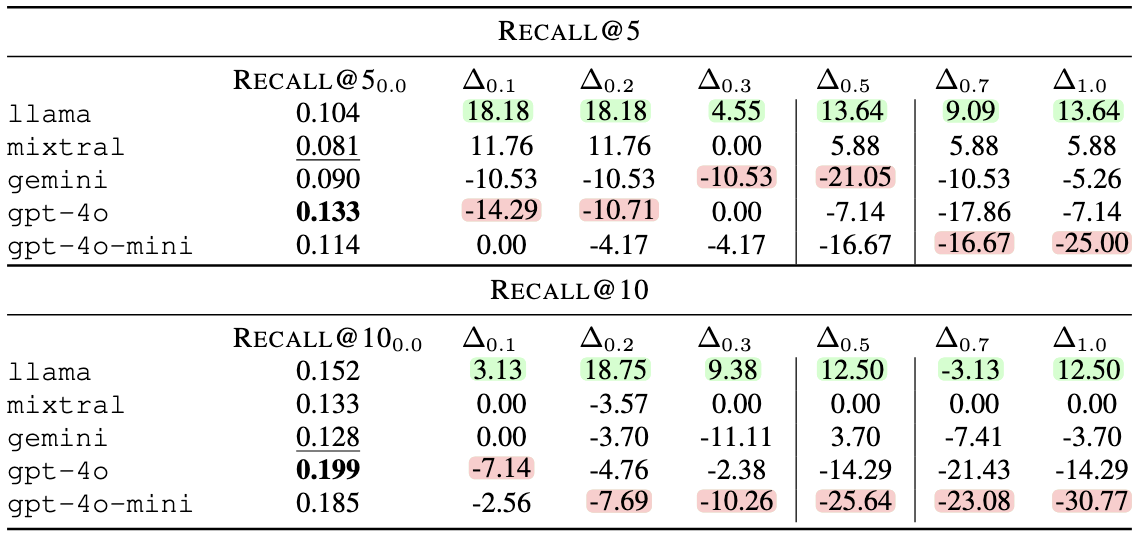

Disfluencies are a characteristic of speech. We focus on the impact of a specific class of disfluency -- whole-word speech substitution errors (WSSE) -- on LLM-based conversational recommender system performance. We develop Syn-WSSE, a psycholinguistically grounded framework for synthetically creating genre-based WSSE at varying ratios to study their impact on conversational recommender system performance. We find that LLMs are impacted differently: Llama and Mixtral show improved performance in the presence of these errors, while Gemini, GPT-4o, and GPT-4o-mini show deteriorated performance. We hypothesize that this difference in model resiliency is due to differences in the pre- and post-training methods and data, and that the increased performance is due to the introduced genre diversity. Our findings indicate the importance of a careful choice of LLM for these systems, and more broadly, that disfluencies must be carefully designed for, as they can have unforeseen impacts.
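The title's example ("I want a horror -- comedy -- movie") suggests how a genre slip can be simulated. A toy sketch, assuming a fixed genre list and a "<slip> -- <intended>" surface form (both hypothetical, as is the function name; Syn-WSSE's psycholinguistic grounding is not reproduced here), that injects genre slips at a chosen ratio:

```python
import random

GENRES = ["horror", "comedy", "drama", "romance", "thriller"]  # hypothetical inventory


def inject_genre_slips(utterance, ratio, rng):
    """With probability `ratio`, rewrite each genre mention as
    "<wrong genre> -- <intended genre>" to mimic a self-corrected slip."""
    out = []
    for token in utterance.split():
        if token in GENRES and rng.random() < ratio:
            slip = rng.choice([g for g in GENRES if g != token])
            out.extend([slip, "--", token])
        else:
            out.append(token)
    return " ".join(out)


rng = random.Random(0)
print(inject_genre_slips("I want a comedy movie", 1.0, rng))  # e.g. "I want a <genre> -- comedy movie"
```

Sweeping `ratio` from 0.0 to 1.0 gives the "varying ratios" setting; note that each slip also introduces an extra genre word into the request, which is one way the added genre diversity mentioned above can arise.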

Howdy Y’all: An Alexa TaskBot
Majid Alfifi, Xiangjue Dong, Timo Feldman, Allen Lin, Karthic Madanagopal, Aditya Pethe, Maria Teleki, Zhuoer Wang, Ziwei Zhu, James Caverlee
Alexa Prize TaskBot Challenge Proceedings 2022

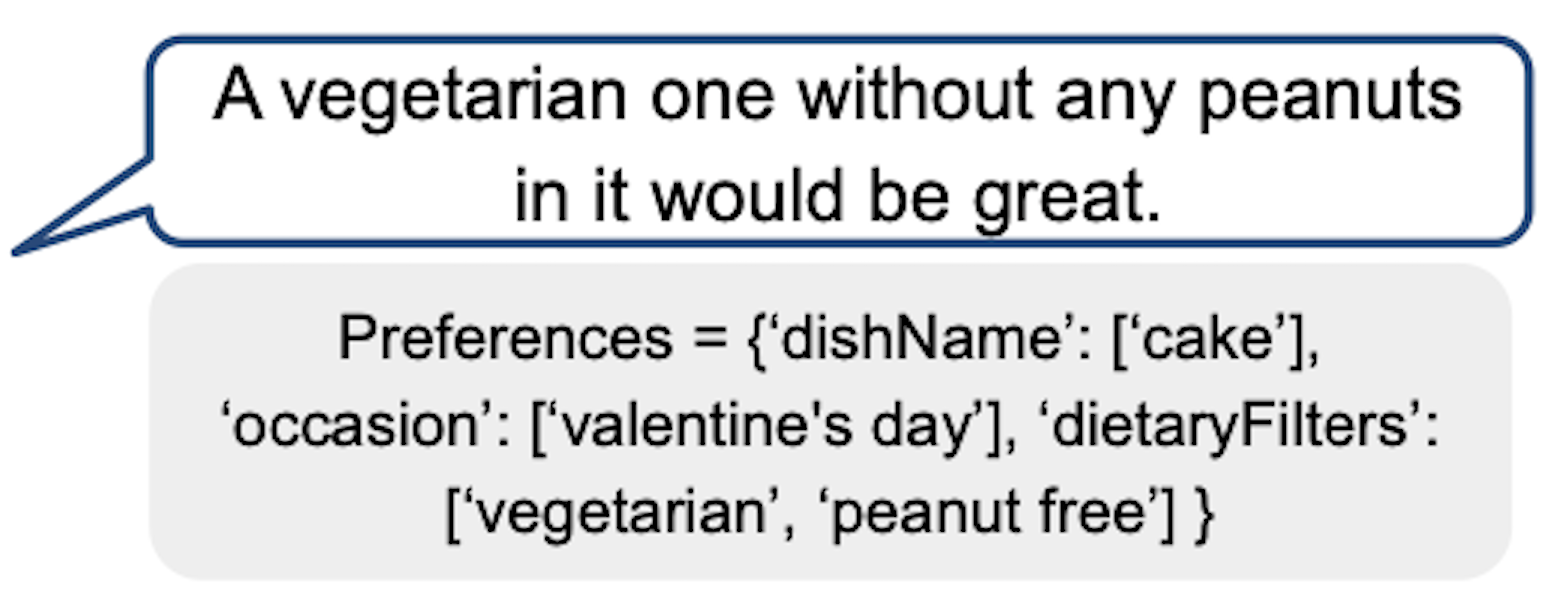

In this paper, we present Howdy Y’all, a multi-modal task-oriented dialogue agent developed for the 2021-2022 Alexa Prize TaskBot competition. Our design principles guiding Howdy Y’all aim for high user satisfaction through friendly and trustworthy encounters, minimization of negative conversation edge cases, and wide coverage over many tasks. Hence, Howdy Y’all is built upon a rapid prototyping platform to enable fast experimentation and powered by four key innovations to enable this vision: (i) First, it combines rule-based, phonetic-matching, and transformer-based approaches for robust intent understanding. (ii) Second, to accurately elicit user preferences and guide users to the right task, Howdy Y’all is powered by a contrastive learning search framework over sentence embeddings and a conversational recommender for eliciting preferences. (iii) Third, to support a variety of user question types, it introduces a new data augmentation method for question generation and a self-supervised answer selection approach for improving question answering. (iv) Finally, to help motivate our users and keep them engaged, we design an emotional conversation tracker that provides empathetic responses, along with a monitor of conversation quality.

Synthesis Contributions

I also contribute conceptual and survey work that maps emerging trends in language technology. These efforts synthesize fast-moving developments and provide frameworks that guide future research on speech, conversation, and language understanding.

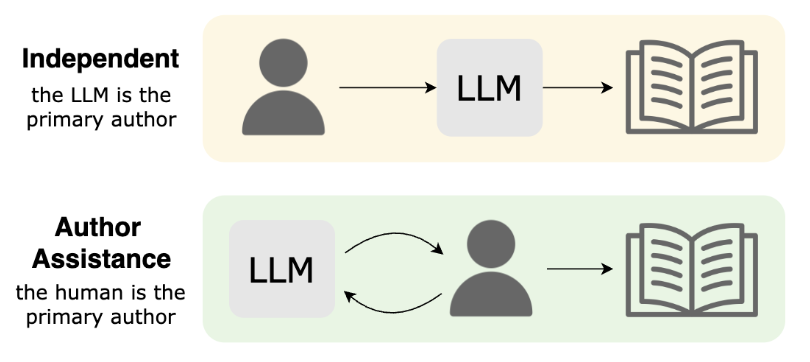

A Survey on LLMs for Story Generation
Maria Teleki, Vedangi Bengali*, Xiangjue Dong*, Sai Tejas Janjur*, Haoran Liu*, Tian Liu, Cong Wang, Ting Liu, Yin Zhang, Frank Shipman, James Caverlee
EMNLP Findings 2025

Methods for story generation with Large Language Models (LLMs) have come into the spotlight recently. We create a novel taxonomy of LLMs for story generation consisting of two major paradigms: (i) independent story generation by an LLM, and (ii) author-assistance for story generation -- a collaborative approach with LLMs supporting human authors. We compare existing works based on their methodology, datasets, generated story types, evaluation methods, and LLM usage. With a comprehensive survey, we identify potential directions for future work.

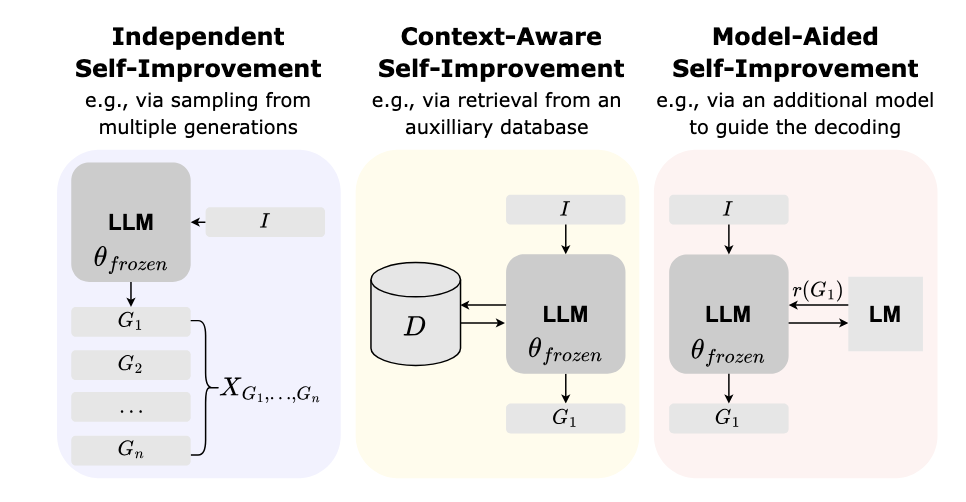

A Survey on LLM Inference-Time Self-Improvement
Xiangjue Dong*, Maria Teleki*, and James Caverlee
arXiv 2024

Techniques that enhance inference through increased computation at test time have recently gained attention. In this survey, we investigate the current state of LLM Inference-Time Self-Improvement from three different perspectives: Independent Self-Improvement, focusing on enhancements via decoding or sampling methods; Context-Aware Self-Improvement, leveraging additional context or a datastore; and Model-Aided Self-Improvement, achieving improvement through model collaboration. We provide a comprehensive review of recent relevant studies, contribute an in-depth taxonomy, and discuss challenges and limitations, offering insights for future research.

Future Vision

The future of conversational AI depends on how well we can model the realities of human communication -- speech that is spontaneous, disfluent, context-dependent, and constantly evolving. Rather than treating these characteristics as noise to be removed, my research views them as rich signals to be modeled, understood, and leveraged.

I aim to develop speech-first models and evaluation frameworks that are:

- Robust to disfluencies, revisions, and spontaneous speech,

- Reliable in real-world, noisy, and context-rich environments, and

- Generalizable across diverse speakers, domains, and communication styles.

By centering variability as a core research challenge, my work lays the scientific and methodological foundation for next-generation conversational systems -- systems that perform reliably outside the lab, adapt across settings, and power applications ranging from information access to decision support.

Education

| (2022 - Present) | PhD in Computer Science at Texas A&M University |

| (2017 - 2022) | B.S. in Computer Science at Texas A&M University -- Summa Cum Laude |

Service

Workshop Organizer for Speech AI for All: The What, How, and Who of Measurement
Kimi V. Wenzel, Alisha Pradhan, Maria Teleki, Tobias Weinberg, Robin Netzorg, Alyssa Hillary Zisk, Anna Seo Gyeong Choi, Jingjin Li, Raja Kushalnagar, Colin Lea, Abraham Glasser, Christian Vogler, Nan Bernstein Ratner, Ly Xīnzhèn M. Zhǎngsūn Brown, Allison Koenecke, Karen Nakamura, Shaomei Wu
CHI 2026

Optimized for "typical" and fluent speech, today's speech AI systems perform poorly for people with speech diversities, sometimes to an unusable or even harmful degree. These harms play out in daily life through household voice assistants and workplace meeting services, in higher-stakes scenarios like medical transcription, and in emerging applications of AI in augmentative and alternative communication. Standard metrics aiming to quantify these inequities, however, fail to comprehensively capture the impact of speech AI on diverse user groups, and furthermore do not easily generalize to newer speech language and speech generation models. To address these social inequities and measurement limitations, this workshop brings academics, practitioners, and non-profit workers together in proactive dialogue to improve measurement of speech AI performance and user impact. Through a poster session and breakout group discussions, our workshop will extend current understanding of how best to leverage existing metrics, like Word Error Rate, within the HCI design ecosystem, and also explore new innovations in speech AI measurement. Key outcomes of this workshop include: a research agenda for the CHI community to guide and contribute to speech AI development, groundwork for new papers on speech AI measurement, and a diversity-centered benchmark suite for external evaluators.

Teaching & Mentoring

If you're a TAMU student looking to get involved in research, send me an email at mariateleki@tamu.edu! Whether you have prior research experience or are just starting out, I have a few spots each semester to mentor and collaborate with students who have a passion for learning, a growth mindset, and a desire to contribute to impactful projects.

Awards

| (2022-2026) | Dr. Dionel Avilés ’53 and Dr. James Johnson ’67 Fellowship in Computer Science and Engineering |

| (Spring 2025) | Department of Computer Science & Engineering Travel Grant |

| (Spring 2024) | CRA-WP Grad Cohort for Women |

| (Spring 2024) | Department of Computer Science & Engineering Travel Grant |

| (2017-2021) | President's Endowed Scholarship |

| (2018) | Bertha & Samuel Martin Scholarship |

Invited Talks

Experience

RetailMeNot
Software Engineering Intern, Austin, TX, May 2021 - August 2021

Used Amazon SageMaker and spaCy to compute BERT embeddings for concatenated coupon titles and descriptions. Analyzed the relationship between each dimension of the BERT embeddings and uCTR using Spearman's correlation coefficient, and used principal component analysis to find dimensions with stronger correlations. Created a plan to evaluate these dimensions as possible features for the Ranker algorithm (which ranks coupons on store pages) using offline analysis and A/B testing. Taught Data Science Guilds about neural networks, word embeddings, and spaCy.

The Hi, How Are You Project
Volunteer, Austin, TX, May 2020 - Dec 2020

Developed the “Friendly Frog” Alexa Skill with the organization at the beginning of the COVID-19 pandemic to promote mental health by reading uplifting Daniel Johnston lyrics and the organization’s “Happy Habits.”

RetailMeNot
Software Engineering Intern, Austin, TX, May 2020 - August 2020

Developed the “RetailMeNot DealFinder” Alexa Skill to help users activate cash back offers. Presented on Alexa Skill Development at the Data Science Sandbox with both Valassis and RetailMeNot teams.

Texas A&M University
Peer Teacher, Dec 2018 - Dec 2019

Helped students with programming homework and answered conceptual questions by hosting office hours and assisting at lab sessions for CSCE 121 and 181. Created notes with exercises and examples to work through as a group during CSCE 121 reviews.

Silicon Labs
Applications Engineering Intern, Austin, TX, May 2019 - August 2019

Designed and implemented the Snooper library using pandas to (1) systematize IC bus traffic snooping (I2C, UART, SPI, etc.) across different snooping devices (Saleae, Beagle, etc.), and (2) translate the traffic into a human-readable form for debugging. Responded to multiple tickets from customers using the library.

The Y (YMCA)
Afterschool Instructor, Sep 2016 - July 2017

Taught multiple weekly classes at local elementary schools for the YMCA Afterschool program, and authored Lego Mindstorms Robotics and “Crazy Science” instruction manuals for the program.